As AI continues transforming industries, Apple has introduced Apple Intelligence, bringing new capabilities to its devices. Meta’s Llama model offers developers more flexibility, while Salesforce’s Industries AI provides tailored solutions across sectors like healthcare and automotive. Alongside these advancements, Pinecone’s vector database enables faster, scalable AI applications. Let’s break down what these innovations mean.

It’s Glowtime: Apple Intelligence Revealed

Apple’s September event, aptly titled “It’s Glowtime,” brought the long-awaited reveal of the iPhone 16 lineup, but it didn’t stop there. This year’s event wasn’t just about hardware: Apple also spelled out the specifics of Apple Intelligence, its entry into the world of AI. After months of speculation, the tech giant finally lifted the curtain on its take on artificial intelligence, and the implications are big.

Apple Intelligence: What It Is and What It Means

Back in June, Apple previewed its plans at WWDC 2024, introducing Apple Intelligence. Conveniently abbreviated to “AI,” Apple Intelligence is not a standalone product but an AI-powered enhancement of existing Apple features. From Writing Tools integrated into apps like Mail and Messages to custom emoji generation (dubbed Genmoji), Apple’s approach is all about making AI practical and user-friendly. Think of it as AI for the everyday user, seamlessly woven into your Apple devices without the need for extra apps or tools.

When and Who Gets It?

The big news is that Apple Intelligence will roll out in beta this October in the U.S., with full functionality available for a select group of devices, including the iPhone 15 Pro and iPhone 15 Pro Max. Localized versions will launch in several English-speaking countries by December, with French, Spanish, Chinese, and Japanese coming in 2025. Notably, only devices powered by the A17 Pro chip or later can run the platform, so the standard iPhone 15 and iPhone 15 Plus will not have access to Apple Intelligence.

A Fresh Take on Siri

One of the most exciting updates is the overhaul of Siri. Apple’s smart assistant will now be more deeply integrated with apps and offer contextual answers based on your actions. From editing photos to composing emails, Siri will deliver a much smoother, on-screen experience. It’s the AI-driven upgrade Siri fans have been waiting for.

Apple’s Small-Model AI Approach

Unlike other generative AI providers, Apple is taking a more conservative, in-house approach to training data. The company has focused on compiling specific datasets for tasks like composing an email or generating images, which means many of these tasks can be performed on-device. For more complex operations, Private Cloud Compute will handle processing, in keeping with Apple’s strong stance on privacy.

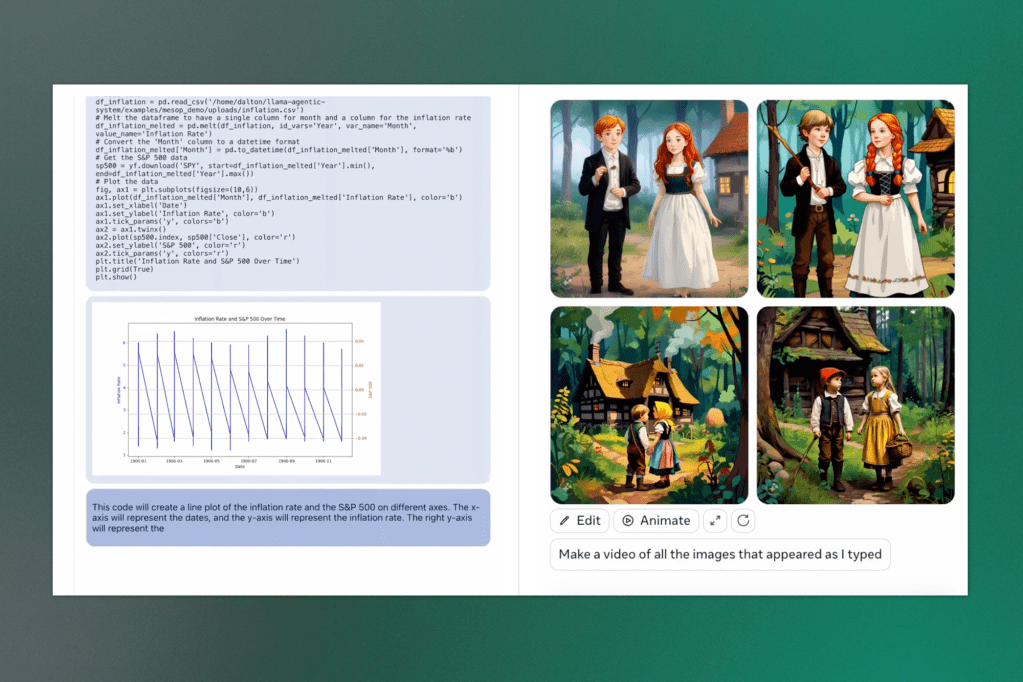

Meta Llama: Everything You Need to Know About This Open Generative AI Model

Meta has officially entered the generative AI race with Llama, a model that stands out for its open approach. Unlike competitors like OpenAI’s GPT-4 or Google’s Gemini, which are often restricted to API access, Llama is designed to give developers more freedom by allowing them to download and customize the model as needed. Here’s what makes Meta Llama unique and why it’s generating so much attention in the AI community.

What Is Llama?

Llama is a family of models, with the latest being Llama 3.1, which includes the 8B, 70B, and 405B versions. The smaller models (8B and 70B) are designed to run efficiently on laptops and servers, while the massive 405B model is reserved for data centers. One standout feature is Llama’s 128,000-token context window, which allows it to handle large documents and complex queries without losing track of previous information. Think about reading and summarizing a 300-page book like Wuthering Heights all at once.
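To see how 128,000 tokens lines up with a book of roughly 300 pages, here is a back-of-the-envelope sketch. The two conversion factors are assumptions, not figures from Meta: about 0.75 English words per token is a common rule of thumb, and 320 words per page is a guess at a typical paperback. Actual token counts depend on the tokenizer and the text.

```python
CONTEXT_WINDOW_TOKENS = 128_000

# Assumptions for illustration only; real values vary by tokenizer and book.
WORDS_PER_TOKEN = 0.75   # rough rule of thumb for English text
WORDS_PER_PAGE = 320     # guess at a typical paperback page


def estimated_pages(tokens, words_per_token=WORDS_PER_TOKEN,
                    words_per_page=WORDS_PER_PAGE):
    """Estimate how many printed pages a given token budget covers."""
    return tokens * words_per_token / words_per_page


def fits_in_context(word_count, words_per_token=WORDS_PER_TOKEN,
                    window=CONTEXT_WINDOW_TOKENS):
    """Check whether a document of word_count words fits in the window."""
    estimated_tokens = word_count / words_per_token
    return estimated_tokens <= window


pages = estimated_pages(CONTEXT_WINDOW_TOKENS)
print(f"Roughly {pages:.0f} pages fit in the context window")
```

Under these assumptions the window covers about 96,000 words, which is how the 300-page comparison comes out.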

What Can Llama Do?

Like other large language models, Llama can handle various tasks, from coding assistance to answering math problems and summarizing documents. It can also interact with third-party tools and APIs, such as Wolfram Alpha for math and science queries and a Python interpreter for code validation. However, unlike some competitors, Llama can’t yet generate or process images, though Meta has hinted that this feature could be added soon.
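As a rough illustration of what interacting with tools means in practice, the sketch below shows a generic dispatch step: the application inspects the model's output, and if it is a tool request, runs the matching tool and returns the result. This is not Meta's API or Llama's actual tool-call format. The JSON shape, the `dispatch` function and the toy `calculator` tool are all hypothetical.

```python
import json


def calculator(expression: str) -> str:
    """Toy stand-in for a math tool; only handles 'a + b'."""
    left, right = expression.split("+")
    return str(float(left) + float(right))


# Hypothetical registry mapping tool names to Python callables.
TOOLS = {"calculator": calculator}


def dispatch(model_output: str) -> str:
    """Run a tool if the output is a JSON tool request, else pass text through."""
    try:
        request = json.loads(model_output)
    except json.JSONDecodeError:
        return model_output  # plain text answer, no tool involved
    if not isinstance(request, dict):
        return model_output
    tool_name = request.get("tool")
    if not isinstance(tool_name, str) or tool_name not in TOOLS:
        return model_output
    return TOOLS[tool_name](request["input"])


tool_reply = dispatch('{"tool": "calculator", "input": "2 + 3"}')
text_reply = dispatch("Wuthering Heights was published in 1847.")
print(tool_reply)
print(text_reply)
```

In a real application the tool result would typically be fed back to the model so it can compose the final answer.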

Where Is Llama Available?

Llama powers Meta AI’s chatbot experiences across Facebook Messenger, WhatsApp, and Instagram, making it accessible to many users. For developers, Llama is available on several major cloud platforms, including AWS, Google Cloud, and Microsoft Azure. Meta has also partnered with over 25 tech companies to offer additional tools and services that enhance Llama’s capabilities, making it easier to fine-tune and deploy across various environments.

Safety Features and Limitations

Meta has introduced a suite of tools to enhance Llama’s safety, including Llama Guard, which moderates harmful or problematic content, and Prompt Guard, which defends against malicious inputs. There’s also CyberSecEval, a tool that assesses the cybersecurity risks associated with using Llama in apps or services. However, like all AI models, Llama carries some risks, particularly around reproducing copyrighted content and generating buggy code, so it’s important to use it cautiously.

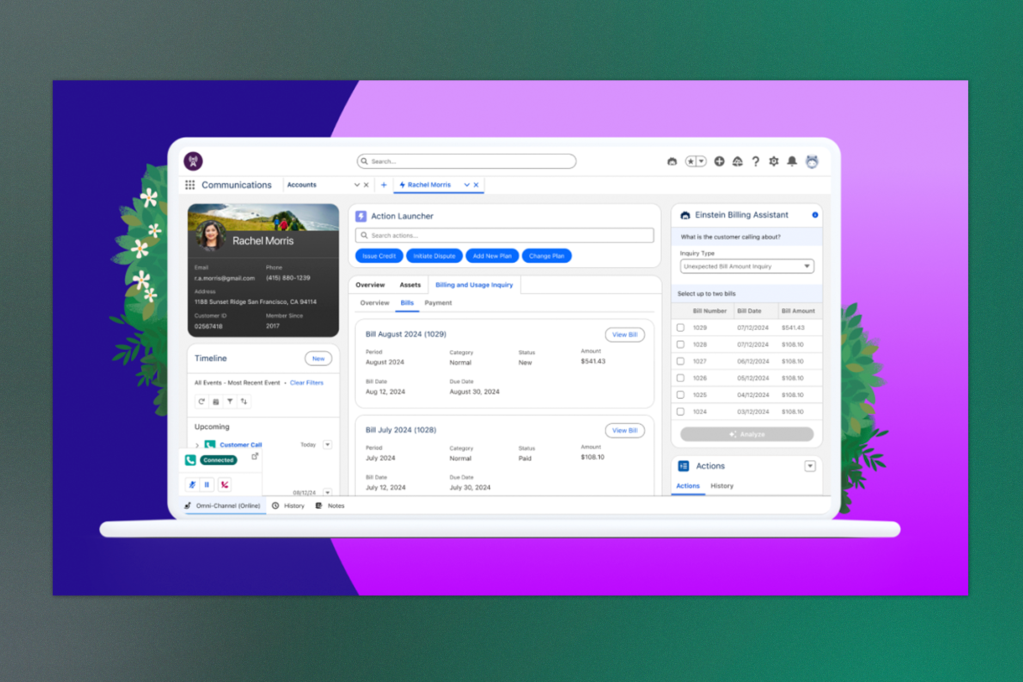

Salesforce Launches Industries AI: Over 100 AI-Powered Solutions for 15 Industries

This week, Salesforce introduced Industries AI, an initiative to deliver over 100 industry-specific AI capabilities across 15 different sectors. With AI now embedded into every Salesforce industry cloud, customers in industries like healthcare, education, manufacturing, and the public sector can leverage tailored AI tools to tackle their unique challenges, straight out of the box.

Industries AI is designed to address industry-specific pain points by automating tasks such as optimizing consumer goods inventory, increasing student recruitment for educational institutions, and providing proactive maintenance alerts for vehicles and machinery in the automotive sector. The platform is backed by Salesforce’s Data Cloud and supported by the Einstein Trust Layer, ensuring data security and privacy. For businesses without the time or resources to build their own AI models, Salesforce’s pre-built AI capabilities offer a plug-and-play solution to drive productivity and value.

Key AI Capabilities Across Industries

Salesforce has made it easy for organizations to get started with Industries AI by launching the AI Use Case Library, which features 100+ AI use cases. Here’s a look at some of the most promising features:

– Healthcare: AI-driven Patient Services & Benefits Verification can now quickly summarize patient histories, care plans, and insurance coverage to accelerate time to care.

– Financial Services: Complaint Summaries use generative AI to summarize customer interactions, helping agents resolve complaints faster.

– Automotive: The Vehicle Telemetry Summary monitors vehicle performance and offers maintenance recommendations, helping service agents ensure vehicle safety.

– Public Sector: Application History uses AI to provide a full overview of an applicant’s request for benefits, streamlining case management for government agencies.

– Education: Recruitment Inquiry & Opportunity Management helps colleges and universities better engage prospective students with AI-driven responses and automated staff assignments.

AI Agents to Boost Efficiency Across Workflows

Salesforce is also working on Agentforce, a suite of AI-driven virtual agents that can autonomously perform industry-specific business tasks around the clock. These AI agents can hand off more complex cases to human agents with full context, so that work gets done at scale and with precision.

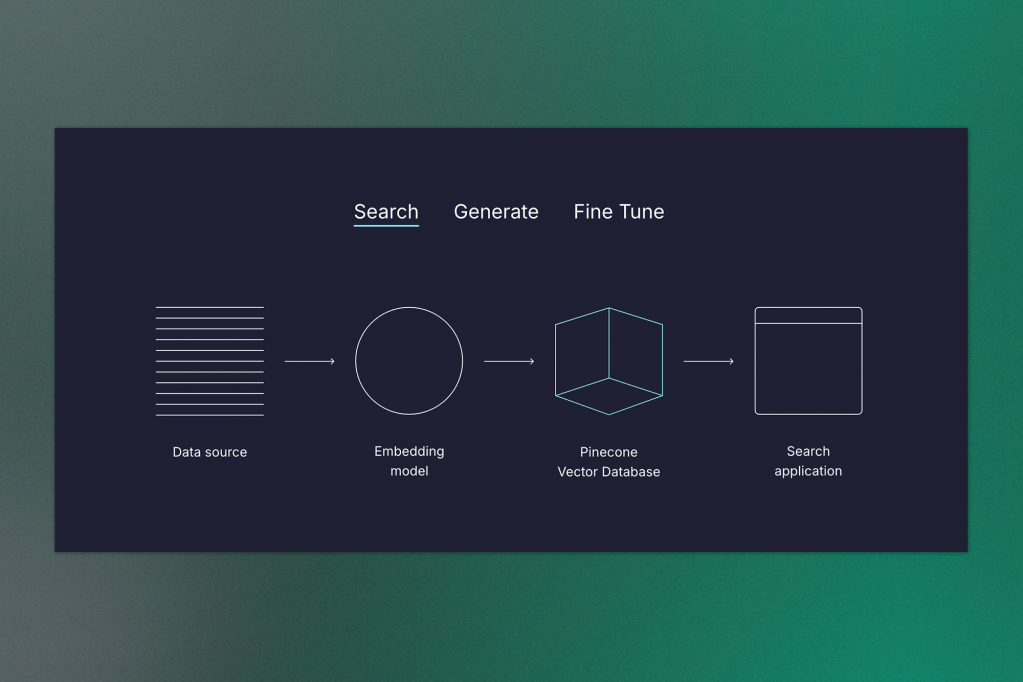

Weekly Tech Highlight: Pinecone

This week’s highlight focuses on Pinecone, the vector database behind some of the world’s best AI-driven applications. Known for its speed and scalability, Pinecone enables developers to build powerful AI solutions by seamlessly managing and searching vast amounts of data. Whether you’re working with vector embeddings or looking to enhance search accuracy with real-time updates, Pinecone is leading the way for AI-driven innovation.

What Makes Pinecone Special?

At its core, Pinecone is a serverless vector database that allows you to create, scale, and search through indexes with ease. It’s designed to support applications that require fast and accurate results, thanks to features like real-time updates, metadata filtering, and hybrid search—which combines vector search with keyword boosting. For developers, this means better, more relevant results that enhance user experiences.
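To make vector search with metadata filtering more concrete, here is a minimal pure-Python sketch of the idea. It does not use Pinecone's SDK and says nothing about how Pinecone works internally. The in-memory `INDEX`, the record layout and the `search` function are assumptions made for illustration.

```python
import math


def cosine_similarity(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def search(index, query, top_k=2, metadata_filter=None):
    """Return the top_k (id, score) pairs whose metadata matches the filter."""
    metadata_filter = metadata_filter or {}
    # Step 1: keep only records whose metadata matches every filter key.
    candidates = [
        rec for rec in index
        if all(rec["metadata"].get(k) == v for k, v in metadata_filter.items())
    ]
    # Step 2: score the remaining records against the query vector.
    scored = [(cosine_similarity(rec["values"], query), rec["id"])
              for rec in candidates]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [(rec_id, round(score, 3)) for score, rec_id in scored[:top_k]]


# Hypothetical toy index: 2-dimensional embeddings with a language tag.
INDEX = [
    {"id": "doc-1", "values": [1.0, 0.0], "metadata": {"lang": "en"}},
    {"id": "doc-2", "values": [0.0, 1.0], "metadata": {"lang": "en"}},
    {"id": "doc-3", "values": [1.0, 0.1], "metadata": {"lang": "fr"}},
]

filtered = search(INDEX, [1.0, 0.0], top_k=2, metadata_filter={"lang": "en"})
unfiltered = search(INDEX, [1.0, 0.0], top_k=2)
print(filtered)
print(unfiltered)
```

With the filter applied, `doc-3` is excluded even though it is nearly identical to the query, which is the practical effect of combining similarity scores with metadata constraints. A production vector database would use an approximate nearest-neighbor index rather than scanning every record.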

Speed and Scalability

With over 30,000 organizations relying on Pinecone, it’s no surprise that performance is one of its standout features. Pinecone boasts a 51 ms query latency and 96% recall, all while scaling from a few vectors to billions. Setting up an index takes just seconds, and you can integrate Pinecone with your favourite tools, from AWS and GCP to frameworks like LangChain and Hugging Face.

Enterprise-Ready and Secure

For businesses concerned about security, Pinecone meets rigorous standards: it is SOC 2 and HIPAA certified. Whether you’re working on mission-critical applications or simply exploring AI capabilities, Pinecone provides the reliability, observability, and scalability needed to grow with confidence.